Exploration tool for Neural Network Verification (NNV) tool from Verivital Labs, Vanderbilt University.

nnv (Matlab Toolbox for Neural Network Verification) This toolbox implements reachability methods for analyzing neural networks, particularly with a focus on closed-loop controllers in autonomous cyber-physical systems (CPS).

Tools and Related Features

This toolbox makes use of the neural network model transformation tool (nnmt) and for closed-loop systems analysis, the hybrid systems model transformation and translation tool (HyST).

Novel Features

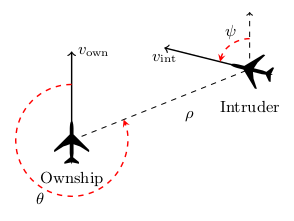

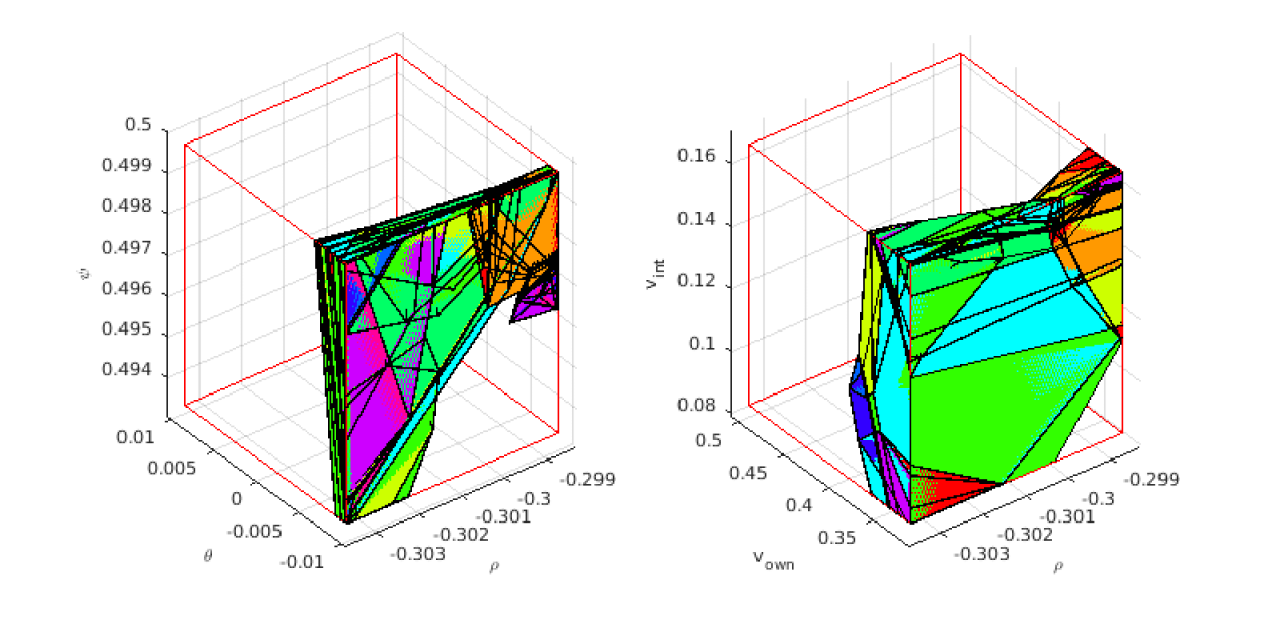

Vertical view of a generic example of the ACAS Xu benchmark set

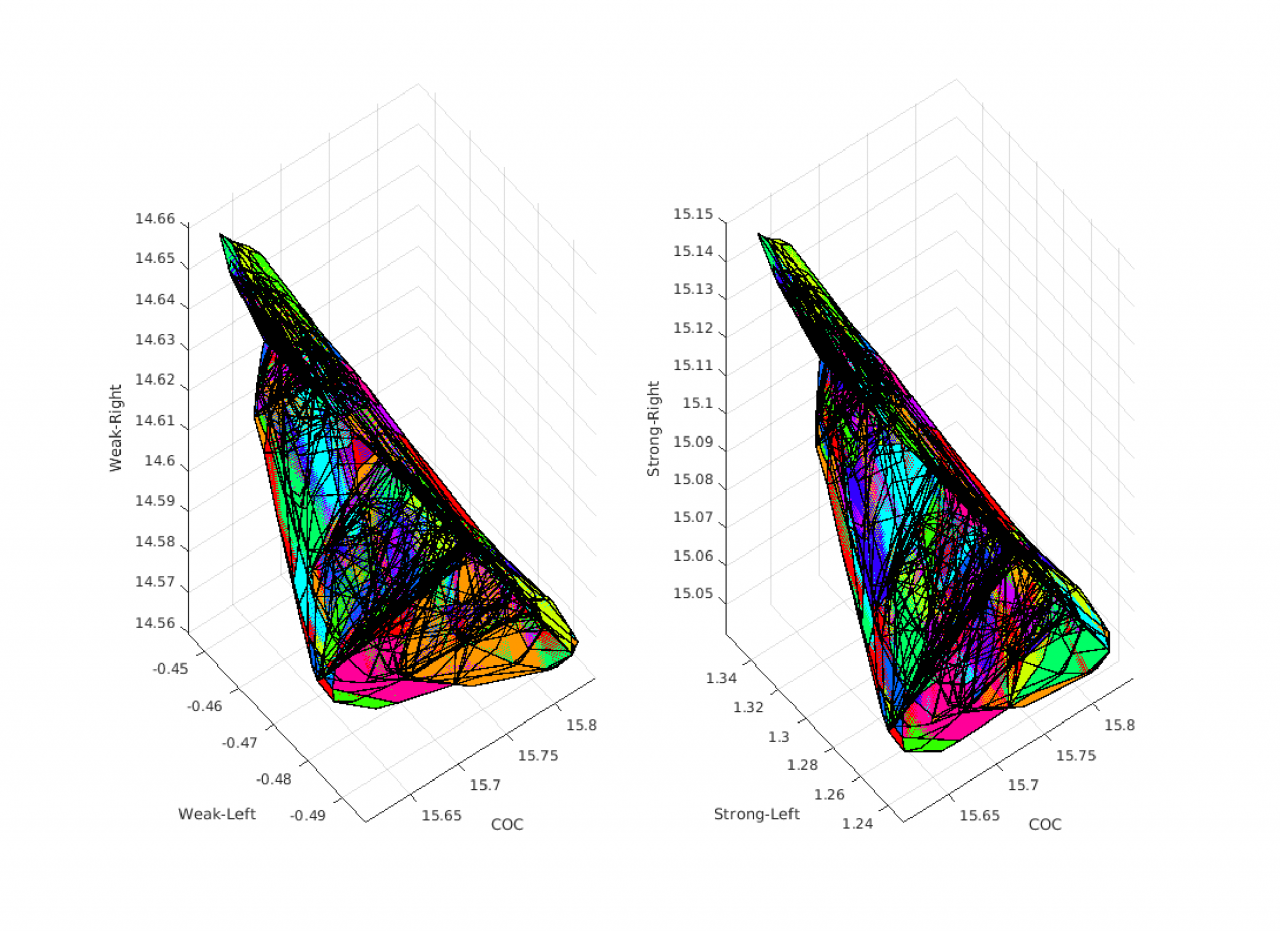

Exact reachable set of an ACAS Xu network

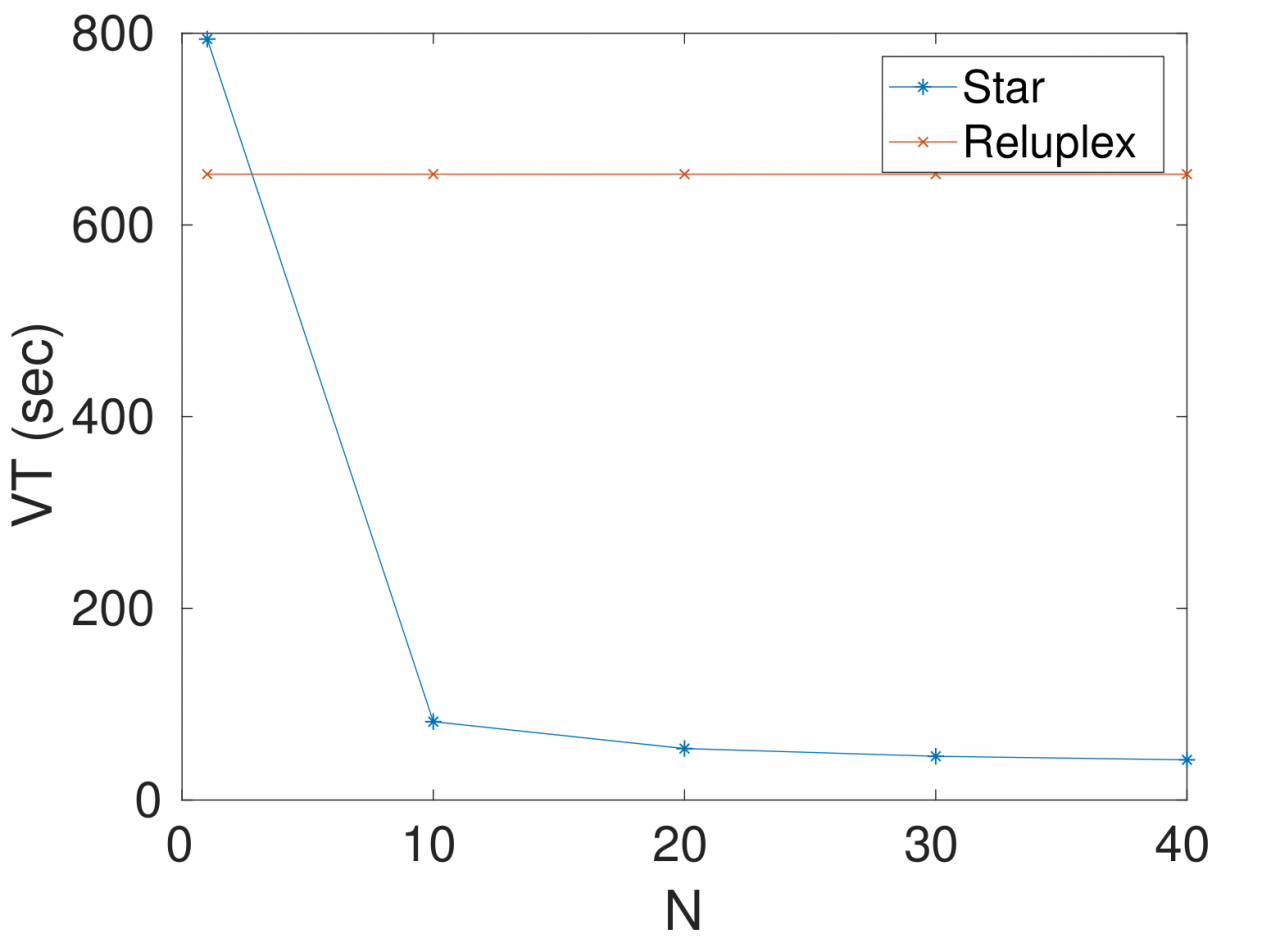

Verification time (VT) can be reduced by increasing the number of cores (N)

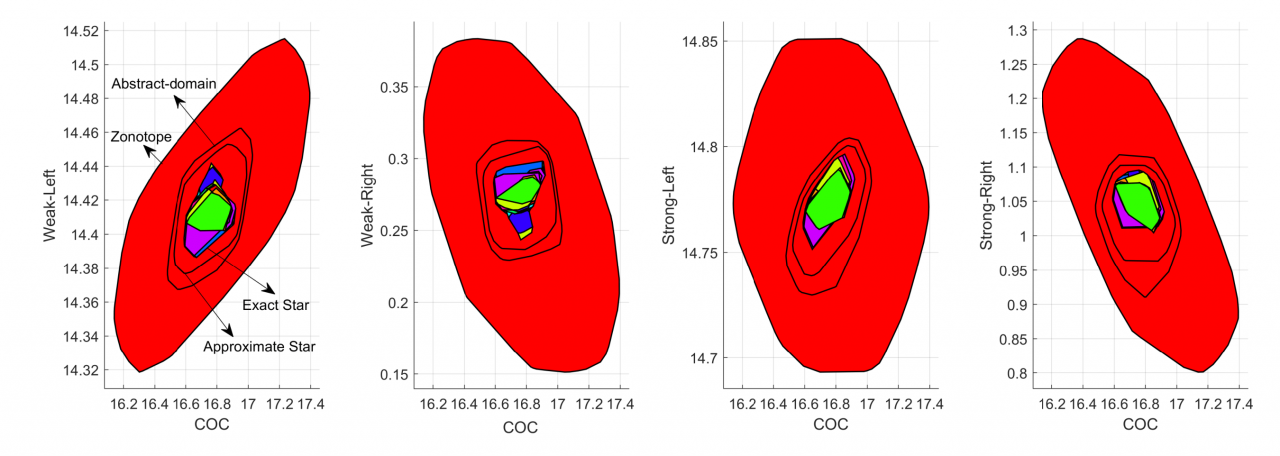

Reachable set of an ACAS Xu network with different methods

Complete Set of Counter Inputs of an ACASXu network

Reachable set of a network with Sigmoid activation functions

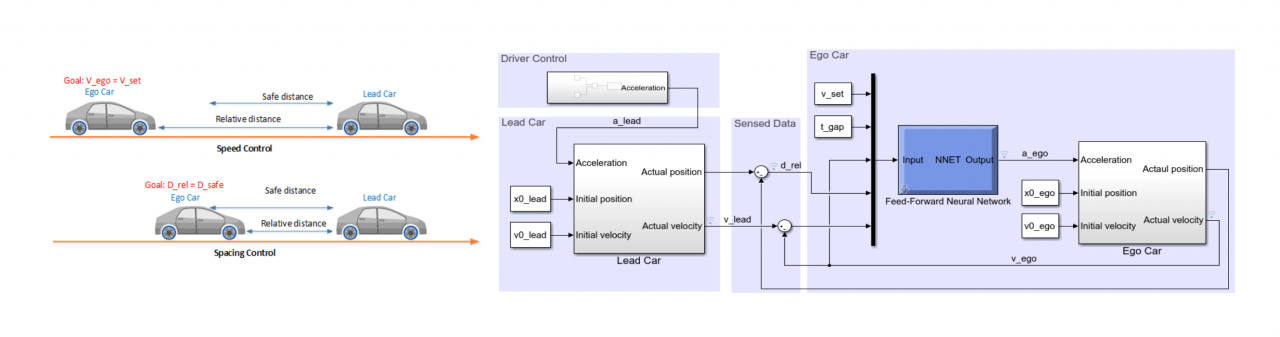

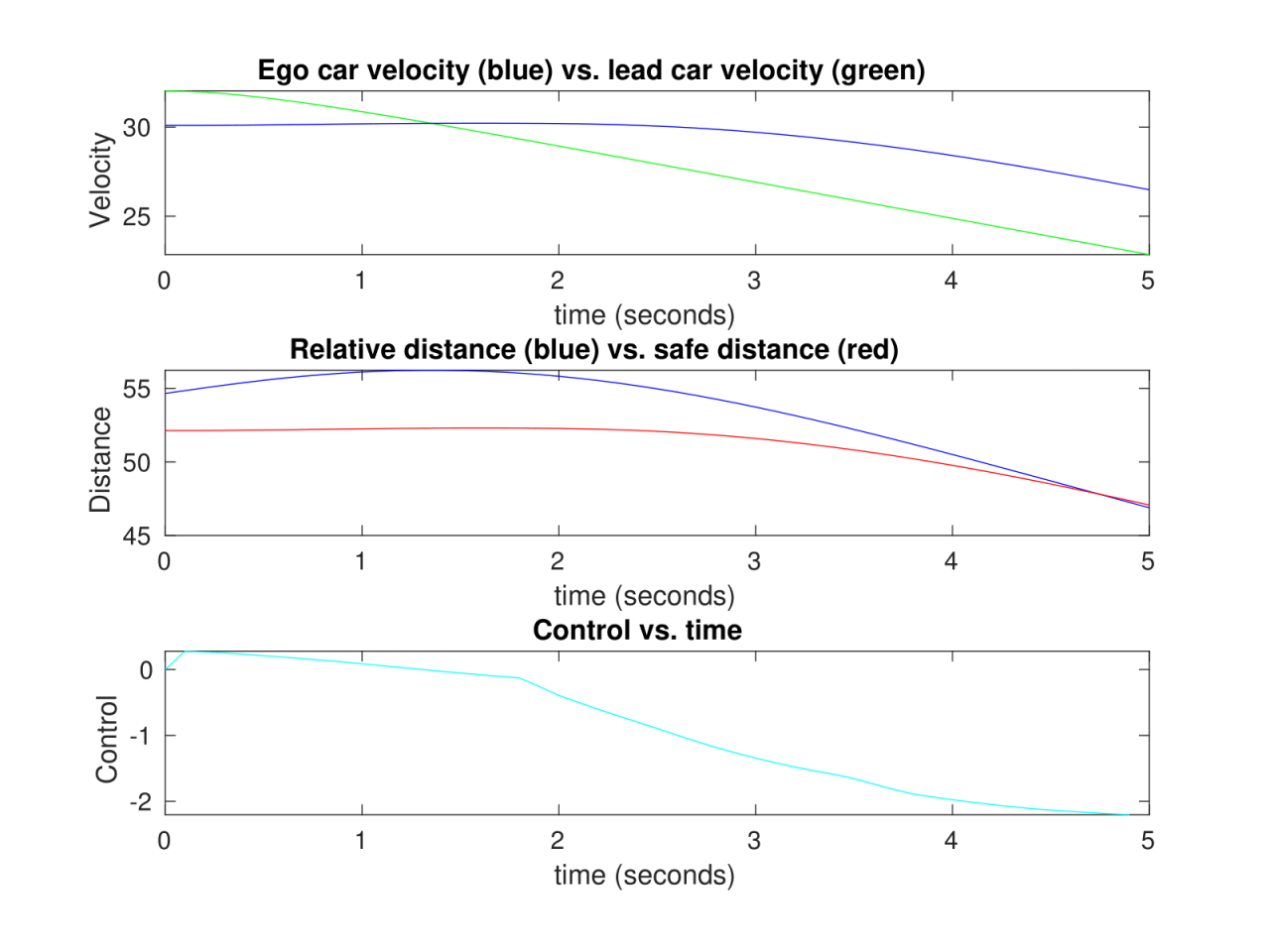

Neural Network Adaptive Cruise Control System

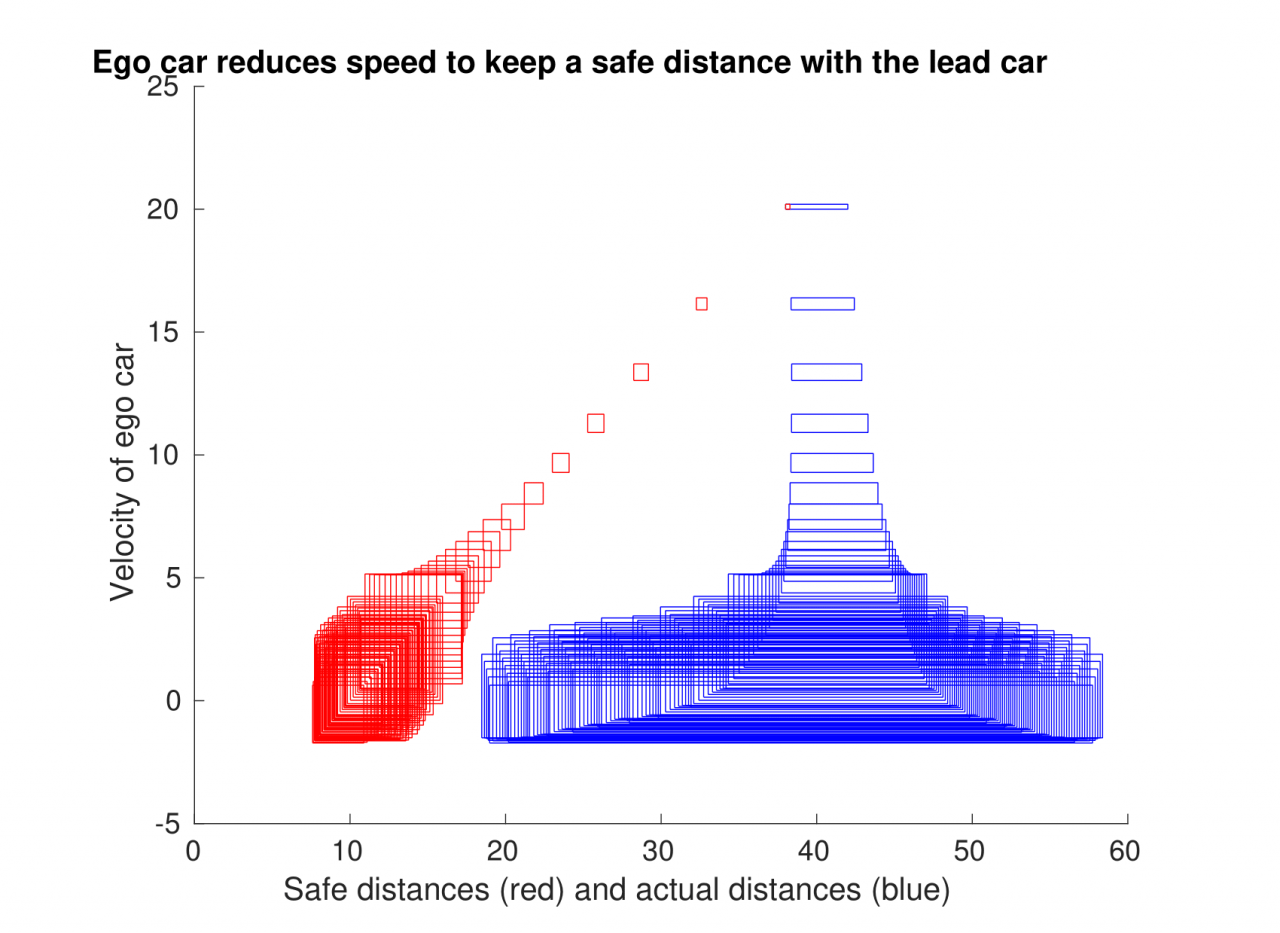

Reachable set of the neural network adaptive cruise control system

A falsification trace of the neural network adaptive cruise control system

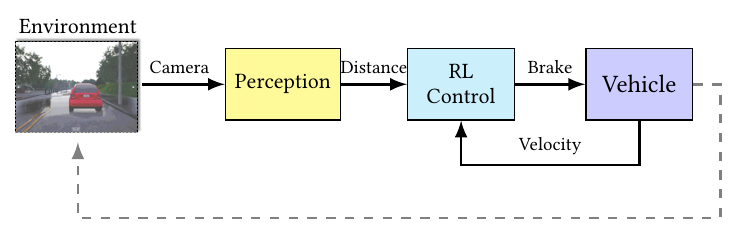

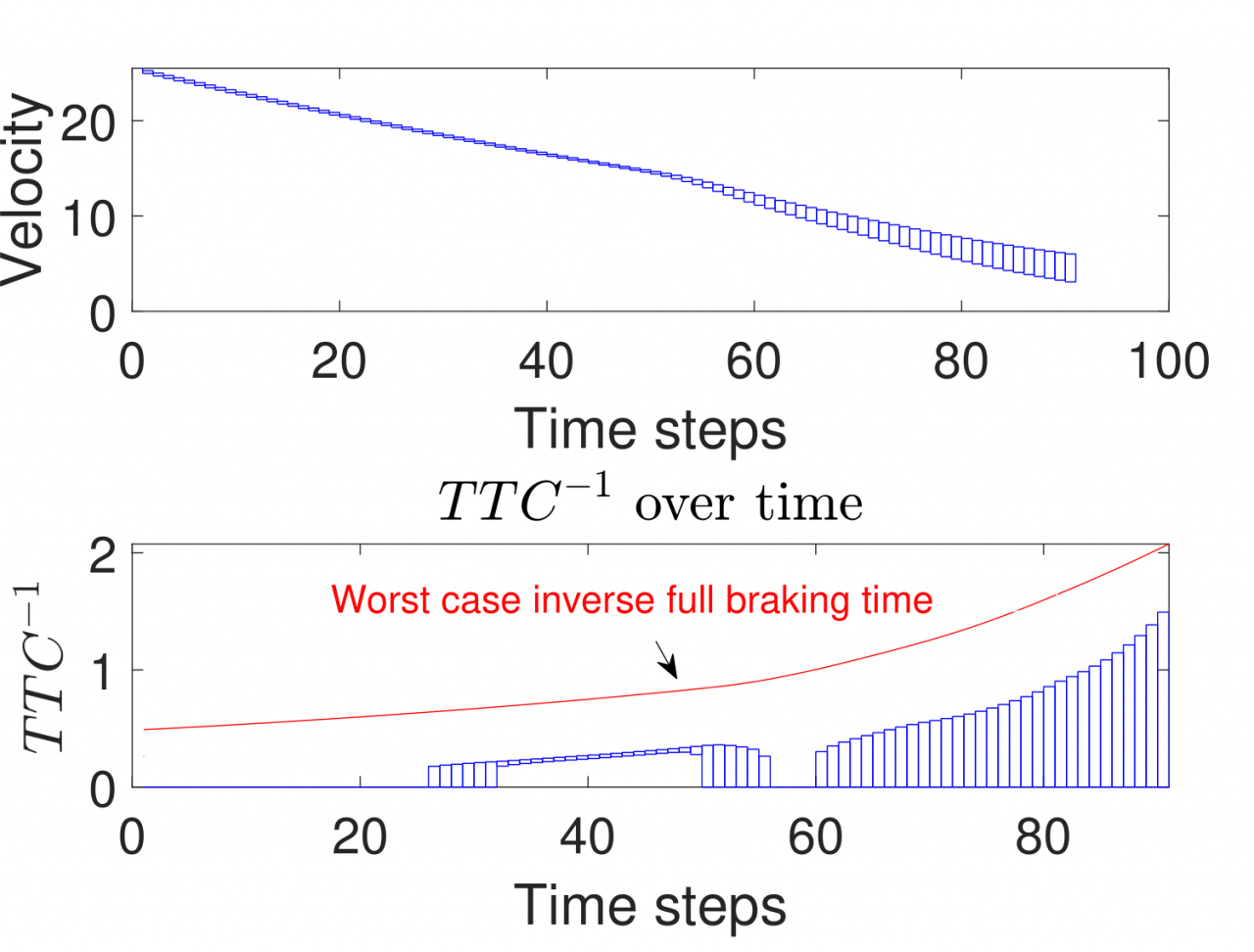

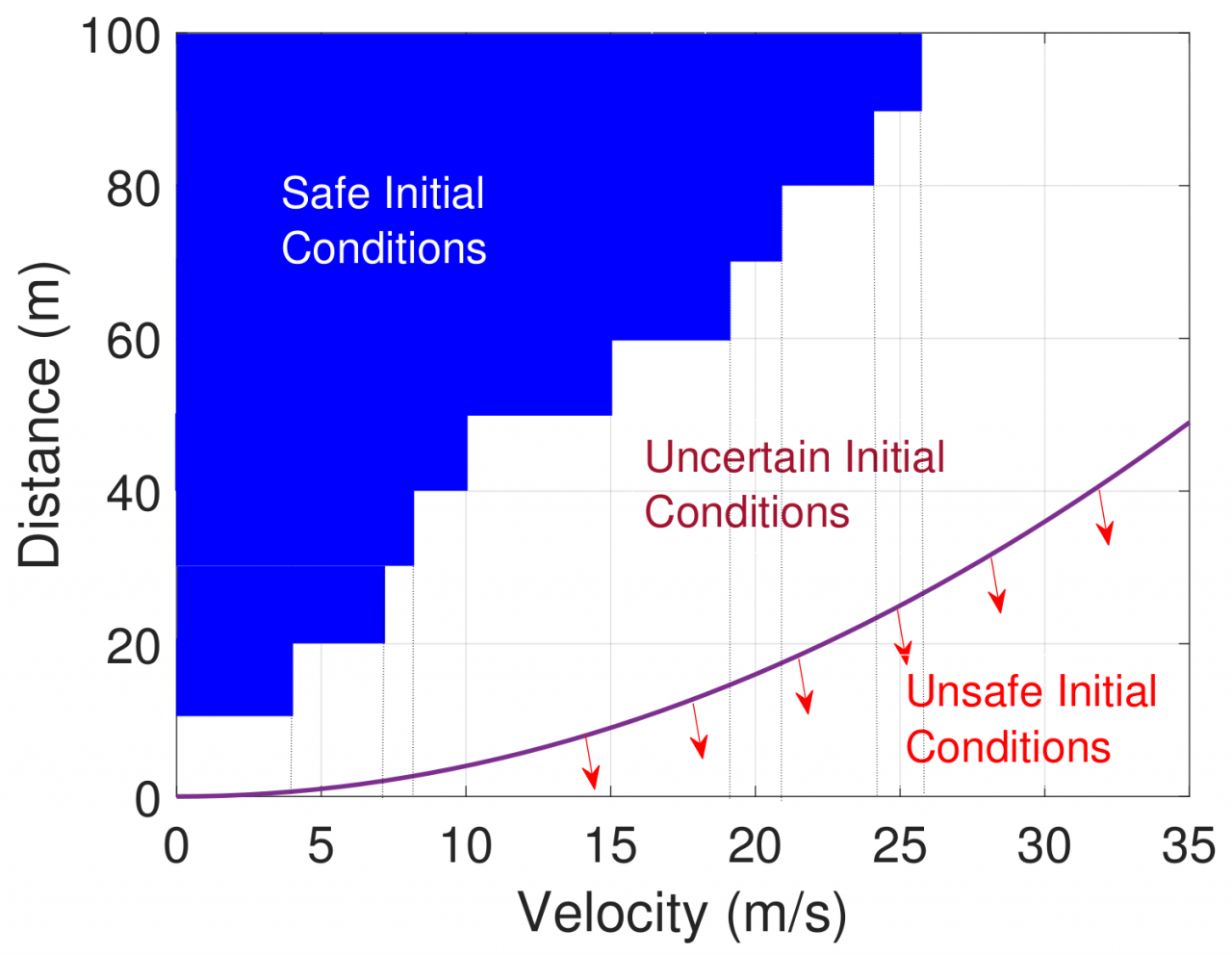

Advanced Emergency Braking System with Reinforcement Controller

Reachable set of the advanced emergency braking system with reinforcement controller

Safe initial condition of the advanced emergency braking system with reinforcement controller

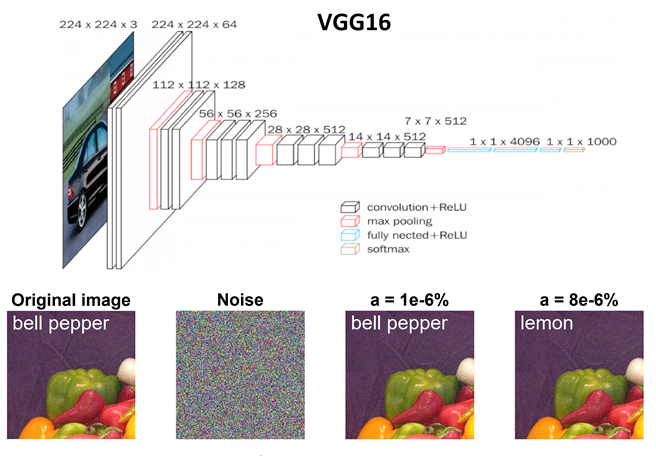

VGG16 under adversarial attacks

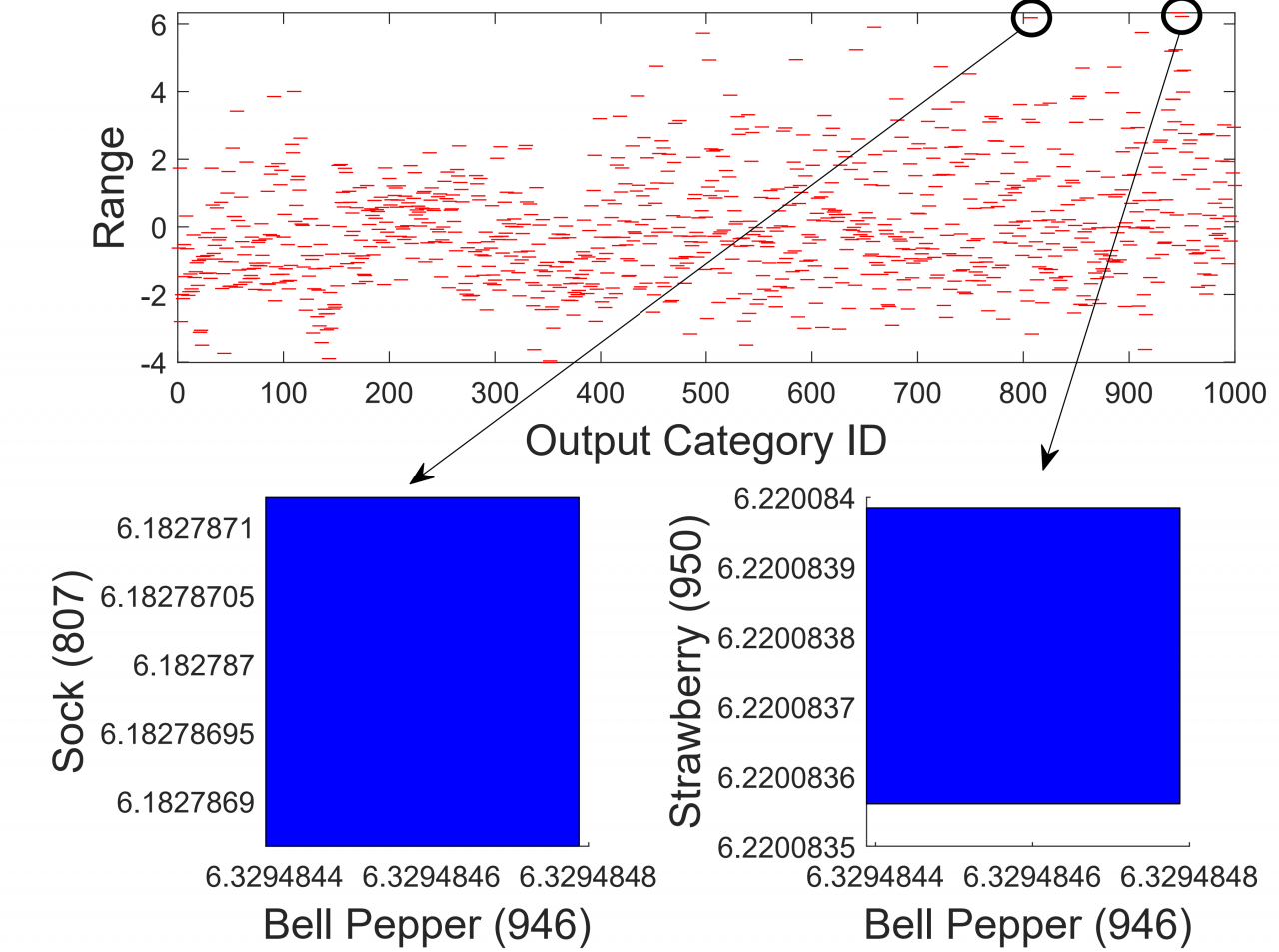

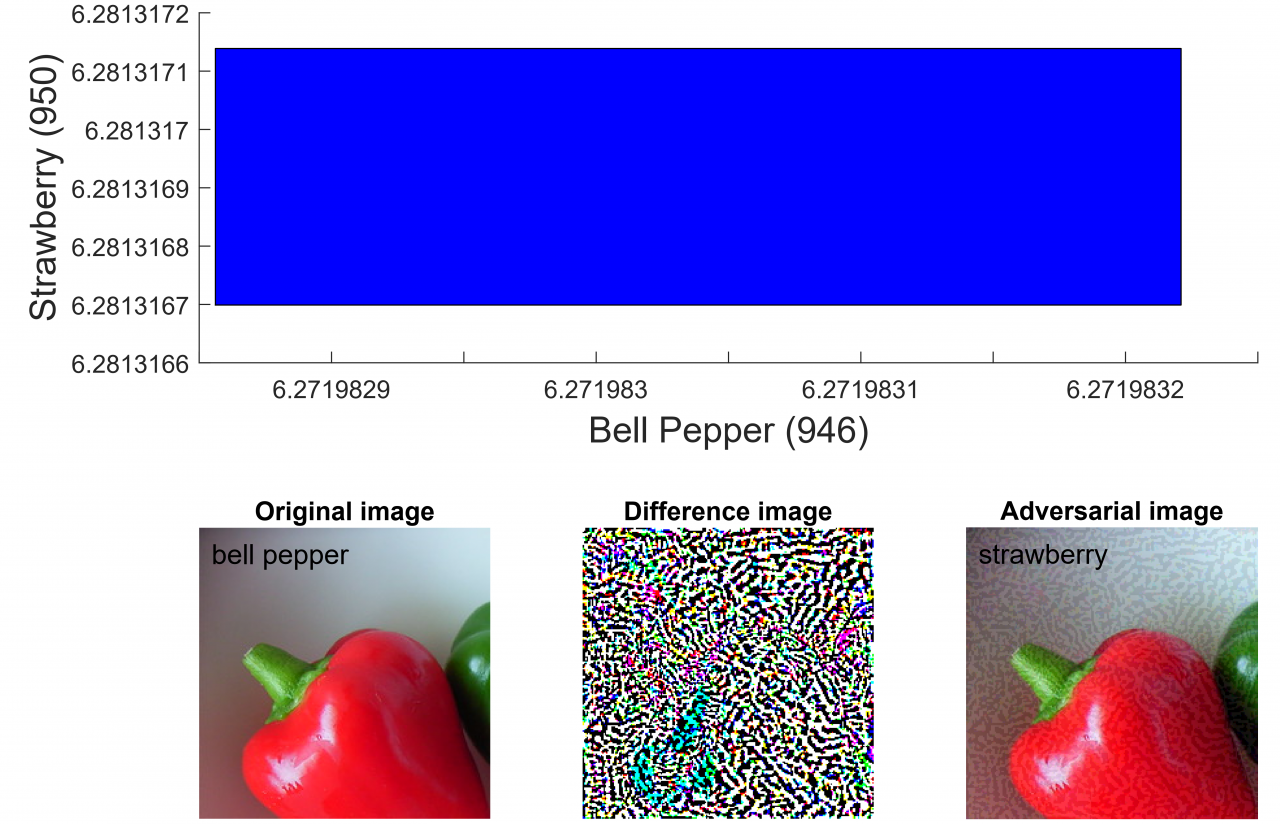

VGG19 exact output ranges corresponding to FGSM adversarial attack

nnv generates counter examples for VGG19 corresponding to FGSM adversarial attack

- NNV can compute and visualize the exact reachable sets of feedforward neural networks with ReLU/Saturation activation functions. The computation can be accelerated using parallel computing in Matlab. The computed reachable set can be used for safety verification of the networks.

- NNV can construct and visualize the complete counter inputs of feedforward neural networks with ReLU/Saturation activation functions.

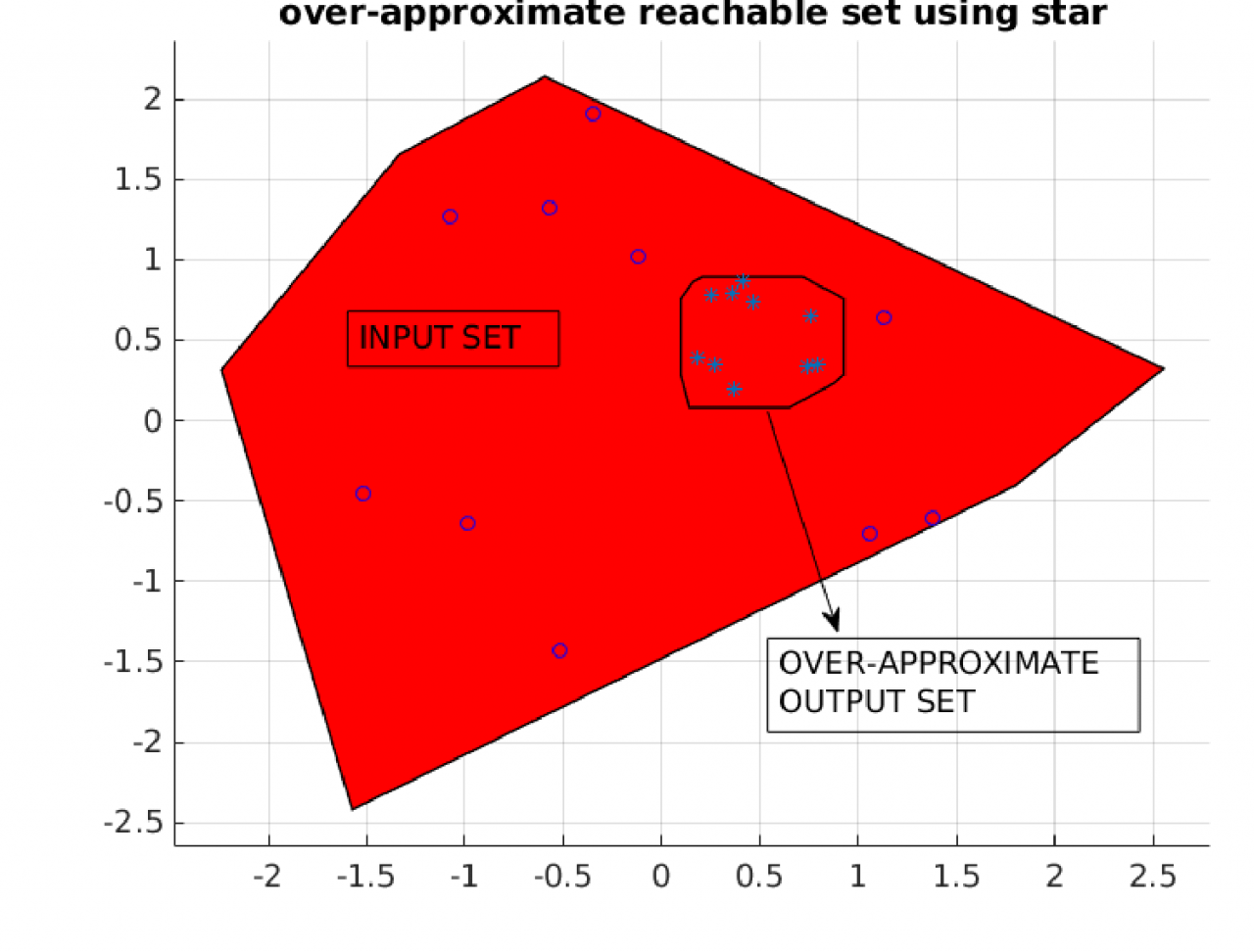

- NNV can compute and visualize the over-approximate reachable sets of feedforward neural networks with Tanh, Sigmoid activation functions.

- NNV can compute and visualize the reachable sets of neural network control systems, i.e., systems with plant + neural network controllers which can be used to verify or falsify safety properties of the systems.

- New feature: Our nnv now supports robustness verification for very large convolutional neural networks such as VGG16, VGG19 under adversarial attacks (FGSM, DeepFoll, etc.)

This work is supported in part by the DARPA Assured Autonomy program.

ORGANIZATION

Vanderbilt University, Nashville, TN, USA